Last month, we lamented California’s Frontier AI Act of 2025. The Act favors compliance over risk management, while shielding bureaucrats and lawmakers from responsibility. Mostly, it imposes top-down regulatory norms, instead of letting civil society and industry experts experiment and develop ethical standards from the bottom up.

Perhaps we could dismiss the Act as just another example of California’s interventionist penchant. But some American politicians and regulators are already calling for the Act to be a “template for harmonizing federal and state oversight.” The other source for that template would be the European Union (EU), so it’s worth keeping an eye on the regulations spewed out of Brussels.

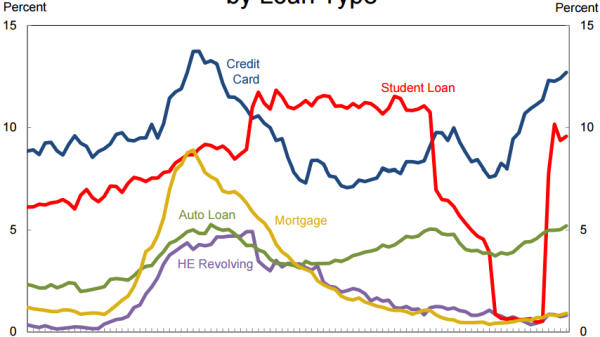

The EU is already way ahead of California in imposing troubling, top-down regulation. Indeed, the EU Artificial Intelligence Act of 2024 follows the EU’s overall precautionary principle. As the EU Parliament’s internal think tank explains, “the precautionary principle enables decision-makers to adopt precautionary measures when scientific evidence about an environmental or human health hazard is uncertain and the stakes are high.” The precautionary principle gives immense power to the EU when it comes to regulating in the face of uncertainty, rather than allowing for experimentation within the guardrails of fines and tort law (as in the US). The result is to stifle ethical learning and innovation. Because of the precautionary principle and associated regulation, the EU economy suffers from greater market concentration, higher regulatory compliance costs, and diminished innovation, compared to an environment that allows for experimentation and sensible risk management. It is small wonder that only four of the world’s top 50 tech companies are European.

From Stifled Innovation to Stifled Privacy

Along with the precautionary principle, the second driving force behind EU regulation is the advancement of rights, but the EU cherry-picks from its Charter of Fundamental Rights, elevating some rights that often conflict with others. For example, the EU’s General Data Protection Regulation (GDPR) of 2016 was imposed with the idea of protecting a fundamental right to personal data protection (this is technically separate from the right to privacy, and gives the EU much more power to intervene, but that is the stuff of academic journals). The GDPR ended up curtailing the right to economic freedom.

This time, fundamental rights are being deployed to justify the EU’s fight against child sexual abuse. We all love fundamental rights, and we all hate child abuse. But, over the years, fundamental rights have been deployed as a blunt and powerful weapon to expand the EU’s regulatory powers. The proposed Child Sexual Abuse Regulation (CSA) is no exception. What is exceptional is the extent of the intrusion: the EU is proposing to monitor communications among European citizens, treating all of those communications as potential threats rather than as protected speech that enjoys a prima facie right to privacy.

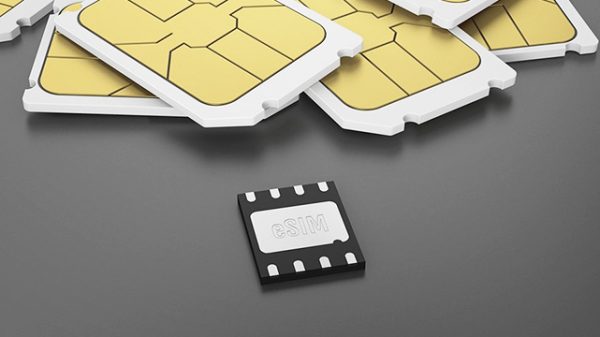

As of 26 November 2025, the EU bureaucratic machine is still negotiating the details of the CSA. In the latest draft, mandatory scanning of private communications has thankfully been removed, at least formally. But there is a catch. Providers of hosting and interpersonal communication services must identify, analyze, and assess how their services might be used for online child sexual abuse, and then take “all reasonable mitigation measures.” Faced with such an open-ended mandate and the threat of liability, many providers may conclude that the safest, and most legally prudent, way to show they have complied with the EU regulation is to deploy large-scale scanning of private communications.

The draft CSA insists that mitigation measures should, where possible, be limited to specific parts of the service or specific groups of users. But the incentive structure points in one direction. Widespread monitoring may end up as the only viable option for regulatory compliance. What is presented as voluntary today risks becoming a de facto obligation tomorrow.

In the words of Peter Hummelgaard, the Danish Minister of Justice: “Every year, millions of files are shared that depict the sexual abuse of children. And behind every single image and video, there is a child who has been subjected to the most horrific and terrible abuse. This is completely unacceptable.” No one disputes the gravity or turpitude of the problem. And yet, under this narrative, the telecommunications industry and European citizens are expected to absorb dangerous risk-mitigation measures that are likely to involve lost privacy for citizens and widespread monitoring powers for the state.

The cost, we are told, is nothing compared to the benefit. After all, who wouldn’t want to fight child sexual abuse? It’s high time to take a deep breath. Child abusers should be punished severely. But that does not exempt a free society from respecting other core values.

But, wait. There’s more…

Widespread Monitoring? Well, Not Completely Widespread

Despite the moral imperative of protecting children (a moral imperative so compelling that the EU is willing to violate other core values to advance it), the proposed CSA introduces a convenient exception. Anything falling under national security, and any electronic communication service that is not publicly available (i.e., available only to elected officials and bureaucrats), would remain entirely untouched. Private chats among citizens require scrutiny, but the conversations of those who claim to protect us are off limits.

As the good minister said, “behind every single image and video there is a child who has been subjected to the most horrific and terrible abuse.” If that is indeed true of every “single image and video,” why would it not also be true of the messages shielded by the CSA’s national security and non-public exceptions? Does the horror somehow dissipate when the users are politicians or bureaucrats? Is the unacceptable suddenly made acceptable when it concerns those who write the rules?

In the EU’s hierarchy of rights, protecting children trumps privacy. But protecting Eurocrats trumps protecting children. In the end, modern technology gives politicians unprecedented opportunities to monitor citizens, while exempting themselves from scrutiny.

There is no chatter yet — that we know of — about imposing similar measures in the US. But, from the wealth tax to AI regulation — and the very origins of the American administrative state — bad ideas from Europe have a nasty way of making their way across the Pond.